New Benchmark Results: iCon Modular AI Hits Top Scores

humanity.ai's modular AI beats leading models on key benchmarks, delivering superior reasoning with 300B parameters, all running on MacBooks, not datacenters. Hardware-efficient, private, and fully scalable.

The journey toward human-level general intelligence requires not just bigger models but fundamentally better architectures. At humanity.ai, we're building precisely that with iCon, our modular AI architecture: a system purpose-built to overcome the structural weaknesses of LLM-based systems.

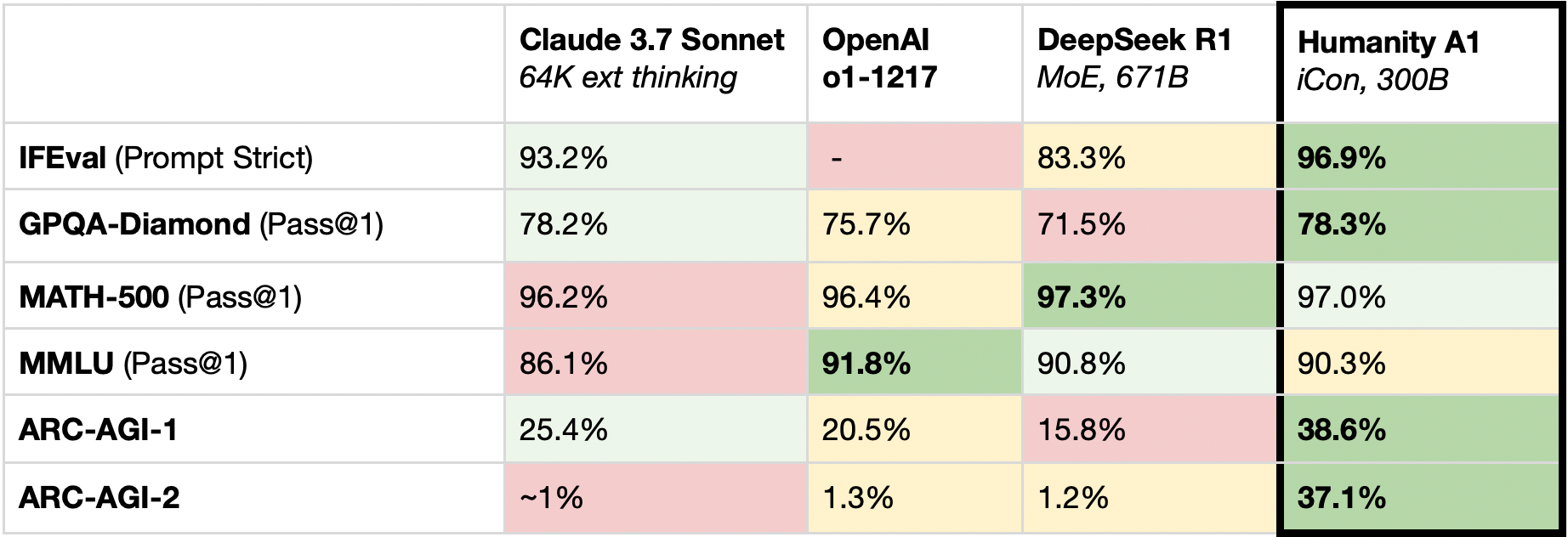

We're proud to share the latest benchmark results for our Humanity A1 model, powered by iCon’s modular hybrid architecture. Despite a combined size of 300B parameters, Humanity A1 consistently matched or outperformed much larger models*, while running efficiently on commodity hardware.

Why this matters:

- Superior reasoning: On ARC-AGI-2, widely regarded as one of the most challenging AGI reasoning benchmarks, Humanity A1 achieved 37.1%, dramatically outperforming the sub-2% scores of frontier models.

- Hardware-efficient: All tests ran on MacBook Pros (M4 Max, 128 GB RAM), with processing distributed across just two devices for heavier workloads such as GPQA-Diamond and MATH-500.

- Modular advantage: iCon’s architecture allows us to assemble small, specialized domain experts optimized for reasoning, verification, and task orchestration. This design eliminates much of the inefficiency, overgeneralization, and hallucination found in massive monolithic models.

- Rapid assembly: Humanity A1 was built and tuned for these evaluations in a matter of days, highlighting the agility, scalability, and maintainability that modular systems provide.

*Please note that as of June 2025, these results have not been validated by a third party.

Where we’re headed next:

We ran these benchmarks to show that our modular AI can go head-to-head with systems from leading AI companies that have far more money and far more compute.

But this is just the beginning. The system tested in these benchmarks is a human-assembled modular AI system. We're working on a much more important development: AI that self-assembles, self-refines, self-learns, and evolves on its own.

Stay tuned for:

- Demo videos showing humanity.ai in action

- Third-party benchmark verification

- Advances in humanity.ai's self-refining and self-evolving capabilities, which allow the system to autonomously build new domain experts when gaps are detected

If you're a developer, researcher, or AI enthusiast interested in getting involved with our work, feel free to contact us: [email protected]
