Humanity A1 Teaches Itself in New Gesture Demo

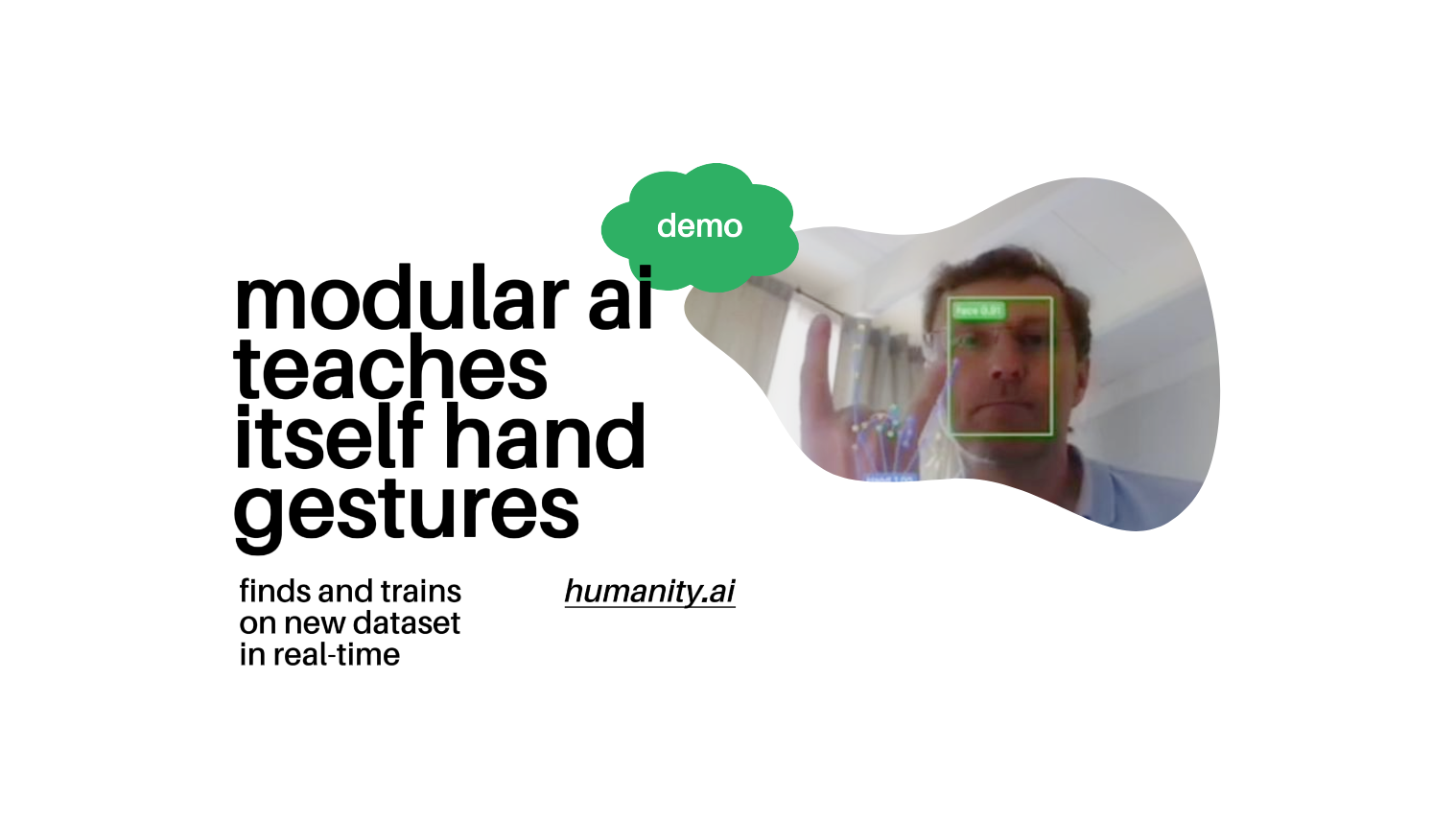

Watch Humanity A1 learn gesture recognition from scratch. In one live session it spawns a new expert, grabs a Kaggle dataset, trains on a Mac Mini with 24 GB of RAM, and deploys the skill, showing the power of self-teaching modular AI.

From “I don’t know” to “I’ve got it” in one uninterrupted session.


What if your AI could recognize when it has a missing skill, go find the data it needs, train itself, and plug the new capability straight into production?

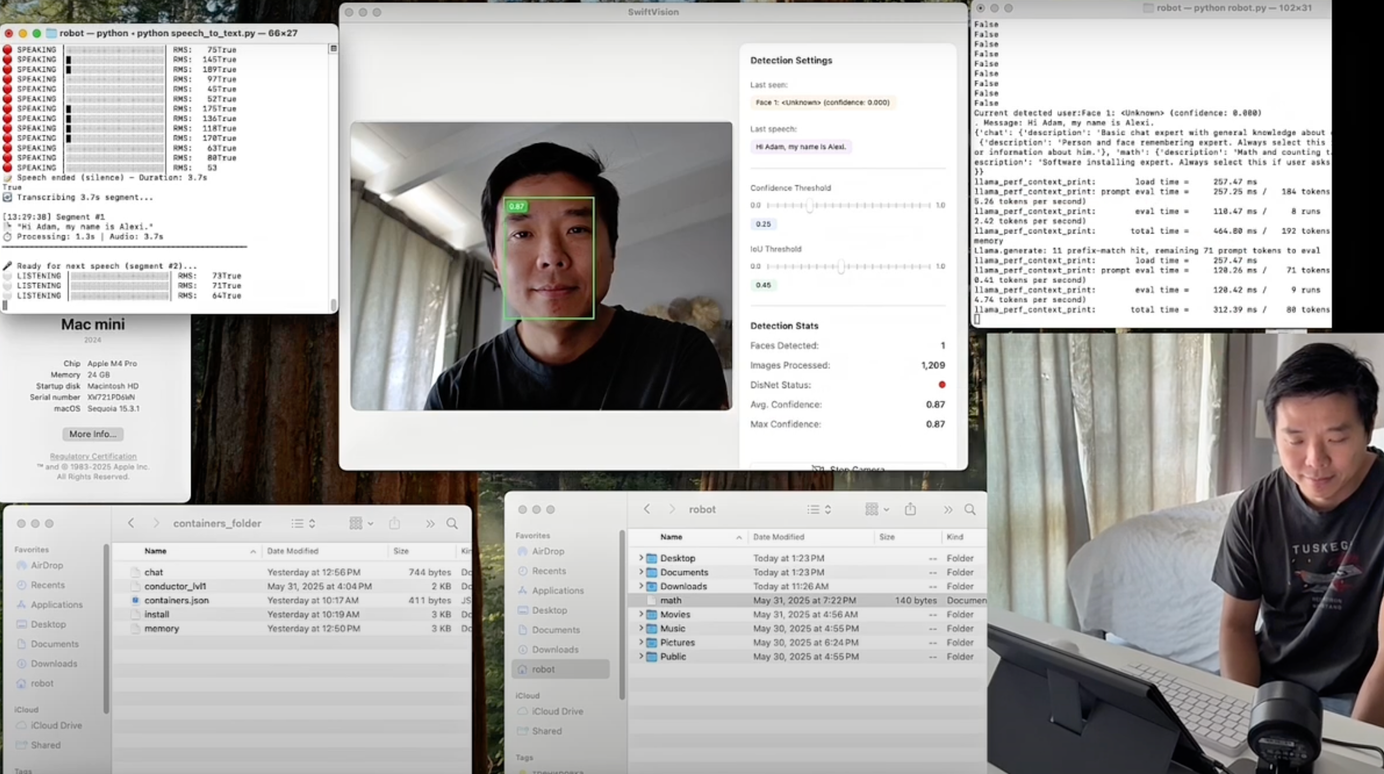

That’s exactly what happens in our latest video, where Evgeniy Usatov (Chariot Technologies Lab’s Head of Patent Strategy) asks Humanity A1—nicknamed “Adam” in this demo—to recognize hand gestures.

The Live Sequence

- Challenge issued. Evgeniy asks Adam to identify a series of hand gestures. Adam can't do it. He doesn't have the knowledge.

- Expert creation. Evgeniy asks Adam to go learn hand gestures. Adam spins up a blank “Gesture-Expert” container inside the iCon architecture.

- Autonomous data hunt. Adam finds and plugs in a gesture-recognition dataset from Kaggle.

- On-device training. Using the Mac Mini’s 24 GB of RAM, the new expert fine-tunes a lightweight vision model, logs metrics, and saves checkpoints.

- Hot-swap deployment. The trained weights are wrapped in the container, registered with the Conductor, and verified by the Verification Module.

- Skill acquired. Evgeniy flashes the "peace" sign. Adam nails it, then goes on to recognize the "call" and "rock" gestures, all in the same session.
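The sequence above is a closed loop: detect a gap, create an expert, train it, verify it, and register it so requests reach it. Here is a minimal Python sketch of that loop. It is a hypothetical illustration, not Humanity A1's implementation. `Conductor`, `Expert`, `acquire_skill`, and `verify` are invented names. A nearest-centroid classifier on toy 2-D feature vectors stands in for fine-tuning a vision model on the Kaggle data, and the 0.9 accuracy threshold is an arbitrary assumption. The post lists registration before verification; this sketch assumes instead that registration only happens once verification passes.

```python
# Hypothetical sketch of a detect-gap -> train -> verify -> register loop.
# None of these names come from Humanity A1; they are illustrative only.
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Sequence, Tuple

Features = Tuple[float, ...]
Sample = Tuple[Features, str]  # (feature vector, gesture label)


@dataclass
class Expert:
    """A hypothetical expert: the skill it serves plus a predict function."""
    skill: str
    predict: Callable[[Features], str]


@dataclass
class Conductor:
    """Hypothetical registry that routes each request to the expert for its skill."""
    experts: Dict[str, Expert] = field(default_factory=dict)
    audit_log: List[str] = field(default_factory=list)

    def register(self, expert: Expert) -> None:
        # Replacing the dict entry swaps in the new expert in a single step.
        self.experts[expert.skill] = expert
        self.audit_log.append(f"registered {expert.skill}")

    def handle(self, skill: str, x: Features) -> str:
        expert = self.experts.get(skill)
        if expert is None:
            return "I don't know"  # knowledge gap: no expert for this skill yet
        return expert.predict(x)


def train_nearest_centroid(data: Sequence[Sample]) -> Callable[[Features], str]:
    """Stand-in for fine-tuning: average the feature vectors of each label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, label in data:
        acc = sums.setdefault(label, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    centroids = {lbl: [v / counts[lbl] for v in acc] for lbl, acc in sums.items()}

    def predict(x: Features) -> str:
        # Pick the label whose centroid is closest in squared Euclidean distance.
        return min(centroids, key=lambda lbl: sum((a - b) ** 2 for a, b in zip(x, centroids[lbl])))

    return predict


def verify(predict: Callable[[Features], str], holdout: Sequence[Sample], threshold: float = 0.9) -> bool:
    """Hypothetical verification gate: held-out accuracy must reach the threshold."""
    correct = sum(predict(x) == y for x, y in holdout)
    return correct / len(holdout) >= threshold


def acquire_skill(conductor: Conductor, skill: str, train: Sequence[Sample], holdout: Sequence[Sample]) -> bool:
    """Create, train, verify, and (only if verified) register a new expert."""
    conductor.audit_log.append(f"created expert {skill}")
    predict = train_nearest_centroid(train)
    conductor.audit_log.append(f"trained {skill} on {len(train)} samples")
    if not verify(predict, holdout):
        conductor.audit_log.append(f"verification failed for {skill}")
        return False  # the unverified expert is never registered
    conductor.register(Expert(skill, predict))
    return True


if __name__ == "__main__":
    conductor = Conductor()
    print(conductor.handle("gesture", (0.9, 0.1)))  # I don't know
    # Toy 2-D features, invented for illustration (not real gesture data).
    train = [((1.0, 0.0), "peace"), ((0.9, 0.1), "peace"), ((0.0, 1.0), "call"), ((0.1, 0.9), "call")]
    holdout = [((0.95, 0.05), "peace"), ((0.05, 0.95), "call")]
    acquire_skill(conductor, "gesture", train, holdout)
    print(conductor.handle("gesture", (0.8, 0.2)))  # peace
```

In this sketch, keying the registry by skill is what makes the swap "hot". `register` replaces one dictionary entry, so every request routed after that point reaches the new expert, and no other entry is touched. The `audit_log` list is a simple stand-in for the kind of reproducibility trail the post describes.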

Why It Matters

The generative AI chatbots popular today come pre-trained: they don't learn new skills in response to a user's needs.

We're working on something entirely different.

This closed-loop learning cycle is the first step toward an AI that can grow like a human apprentice: identify a knowledge gap, study, practice, and deliver—all without disrupting the rest of the system.

Under the Hood

- iCon containers: isolate library versions so nothing conflicts.

- DisNet orchestration: routes requests to the new expert the moment it registers.

- FIONa arithmetic engine: keeps RAM usage low (even while training) by converting control logic into pure math operations.

- Audit trail: every dataset, hyperparameter, and verification check is logged for reproducibility.
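The post doesn't describe how FIONa turns control logic into math. The sketch below illustrates the general idea with a standard branch-free technique, not FIONa's actual method. An `if` that picks between two values can be rewritten as arithmetic on a 0/1 mask. The function names are hypothetical.

```python
# Generic branch-free selection; an illustration, not FIONa's implementation.
from typing import List


def select_branchy(x: float, threshold: float, a: float, b: float) -> float:
    """Conventional control flow: choose a or b with an if statement."""
    if x > threshold:
        return a
    return b


def select_arithmetic(x: float, threshold: float, a: float, b: float) -> float:
    """Same choice with no if statement: the comparison becomes a 1.0/0.0 mask."""
    mask = float(x > threshold)
    return mask * a + (1.0 - mask) * b


def select_many(xs: List[float], threshold: float, a: float, b: float) -> List[float]:
    """Apply one uniform arithmetic expression to every element."""
    return [select_arithmetic(x, threshold, a, b) for x in xs]


if __name__ == "__main__":
    xs = [0.2, 0.7, 1.5]
    print(select_many(xs, 0.5, 10.0, 20.0))  # [20.0, 10.0, 10.0]
```

One trade-off of the arithmetic form is that both candidate values are always computed and combined. If either is `inf` or `nan`, then `0.0 * inf` evaluates to `nan`. The rewrite is therefore a drop-in replacement only when both values are finite.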


What’s Next?

Gesture recognition is only the beginning. We're actively seeking AI researchers and engineers to join our team and help build self-evolving modular AI capable of human-level intelligence. Contact us at: [email protected]

Sign up for the humanity.ai email newsletter to get the latest updates delivered to your inbox.