London Dispatch: Our Self-Evolving AI Goes to School

We did something rare in AI: unlocked open-ended self-supervised learning. From a lean start, our humanity.ai system taught itself school curricula, created subject experts and 1,024 tools, and kept improving. Here are the takeaways we shared at IMOL 2025.

This summer, our team of AI engineers set out to test the self-evolving capabilities of our humanity.ai system by asking it to teach itself an expansive set of school curricula.

The results were heartening, demonstrating robust self-improvement via open-ended, self-supervised learning. Our findings were so compelling, in fact, that we were asked to share the details of our work at the Intrinsically Motivated Open-Ended Learning Conference at the University of Hertfordshire, just outside London.

So our founder and CTO Timur Ryspekov, lead AI engineer Artem Kuznetsov, and head of patent strategy Evgeniy Usatov packed up our work and spent this week chatting with conference attendees about our self-evolving AI research.

Now that the conference has concluded, we're sharing our work with the wider world, and that includes you. Below are the key takeaways.

humanity.ai's Success with Open-Ended, Self-Supervised Learning: What to Know

What is the iCon AI architecture?

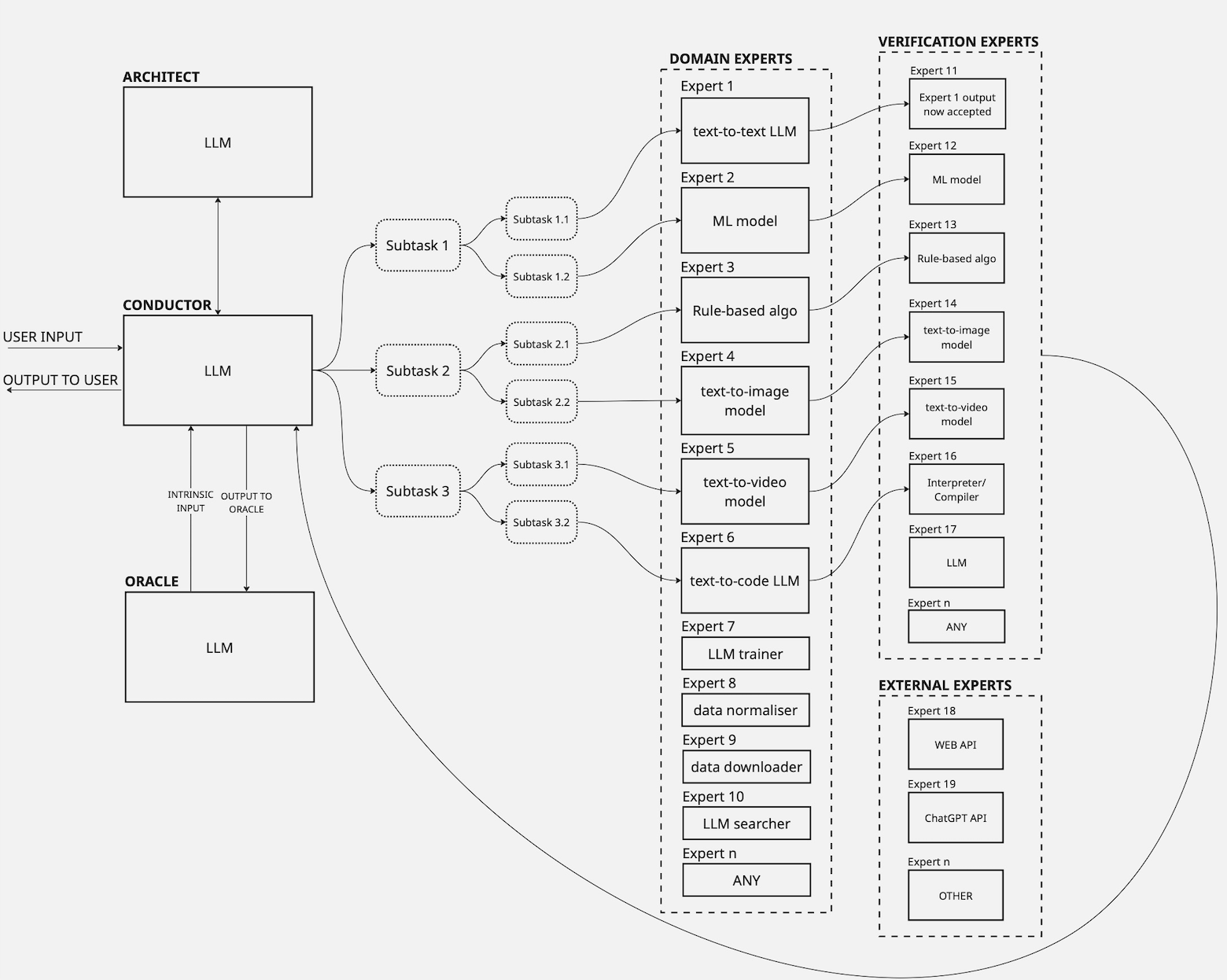

iCon is a modular “interpretable container” architecture designed for continual, self-supervised learning; it adapts to new tasks by refining existing modules and adding new experts. Our humanity.ai system is built using the iCon architecture.

System makeup: the system is a hybrid ensemble of generative, ML, and rule-based domain experts, each paired with a verification expert. A Conductor LLM decomposes tasks into subtasks and compiles the experts' outputs. If verification fails or no expert exists for a subtask, an Architect fine-tunes an existing expert or creates a new one. An internal Oracle generates probes to expose knowledge gaps.
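
To make that control flow concrete, here is a minimal toy sketch of the loop described above. It is not our production code: `ToySystem`, `Expert`, the `"domain: subtask"` task format, and the upper-casing "expert" are all hypothetical stand-ins chosen for illustration, and the Oracle's probing step is left out entirely.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Expert:
    # A domain expert paired with its verification expert (toy callables).
    solve: Callable[[str], str]
    verify: Callable[[str, str], bool]


@dataclass
class ToySystem:
    experts: Dict[str, Expert] = field(default_factory=dict)
    events: List[str] = field(default_factory=list)

    def conduct(self, task: str) -> List[Tuple[str, str]]:
        # Conductor stand-in: split "domain: subtask; domain: subtask" into pairs.
        pairs = []
        for part in task.split(";"):
            domain, _, subtask = part.partition(":")
            pairs.append((domain.strip(), subtask.strip()))
        return pairs

    def architect(self, domain: str) -> Expert:
        # Architect stand-in: create (or replace) the expert for a domain.
        # A real Architect would fine-tune or build a model; this toy version
        # registers a trivial expert that upper-cases its input.
        self.events.append(f"architect:{domain}")
        expert = Expert(
            solve=lambda s: s.upper(),
            verify=lambda s, out: out == s.upper(),
        )
        self.experts[domain] = expert
        return expert

    def run(self, task: str) -> List[str]:
        outputs = []
        for domain, subtask in self.conduct(task):
            expert = self.experts.get(domain)
            if expert is None:
                expert = self.architect(domain)  # no expert exists yet
            answer = expert.solve(subtask)
            if not expert.verify(subtask, answer):
                expert = self.architect(domain)  # verification failed
                answer = expert.solve(subtask)
            outputs.append(answer)
        # A real Conductor would compile these into a single response.
        return outputs


system = ToySystem()
# Seed a deliberately broken "math" expert to trigger the verification path.
system.experts["math"] = Expert(solve=lambda s: "", verify=lambda s, out: bool(out))
result = system.run("math: two plus two; history: magna carta")
```

In this run, the seeded math expert fails verification and is replaced, while the missing history expert is created on demand, so `system.events` records one Architect call for each domain.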

The iCon architecture in detail

Self-Learning Experiment Explained

To analyze the system’s self-learning capability, we created a baseline iCon-based system and asked it to teach itself school curricula [Duke University, OER Commons]. Upon execution, the system autonomously expanded to a new state, allowing it to excel at the specific topics contained in the materials.

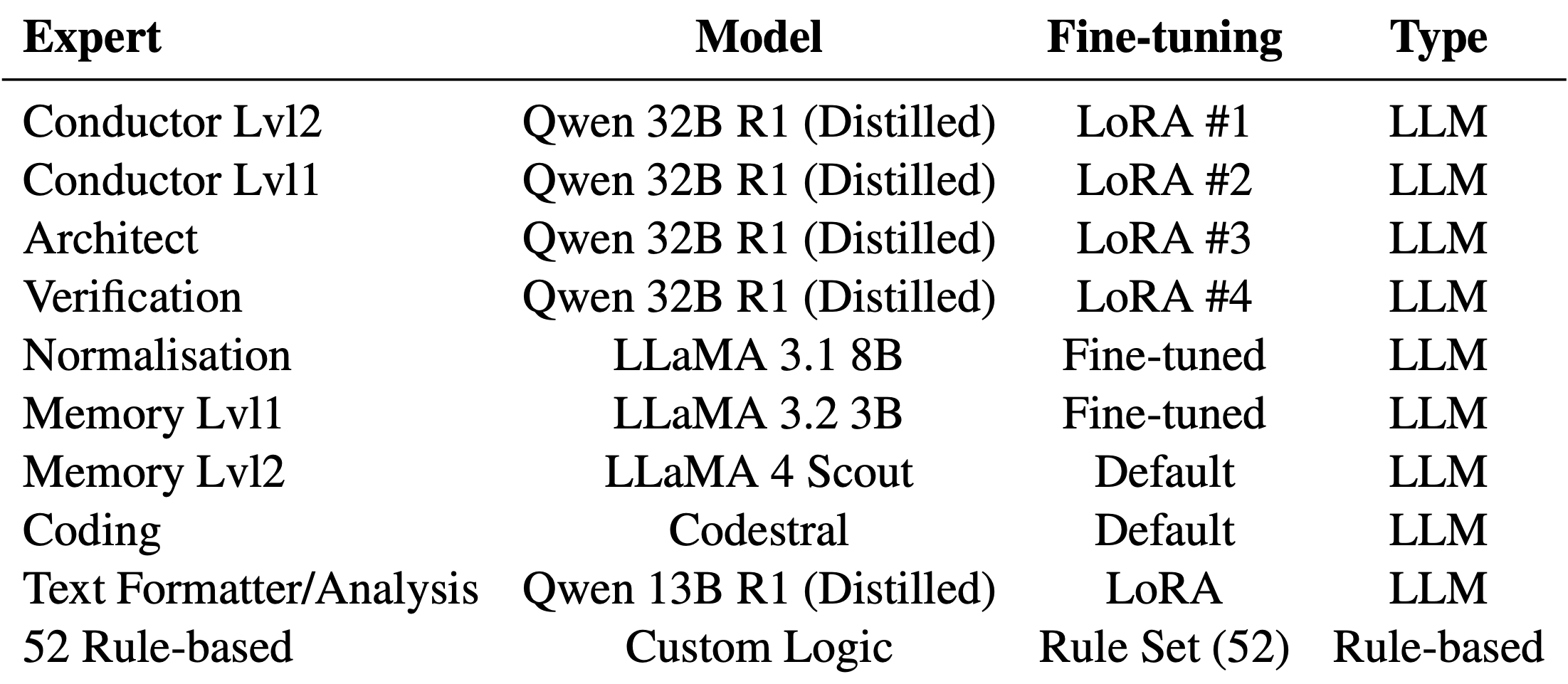

Before Self-Evolution

Self-learning experiment setup (zero-state): the Conductor, Architect, and Verification experts were built on Qwen-32B (LoRA); the normalization and memory experts came from the LLaMA family; Codestral handled coding; and a Qwen-13B model served as the formatter. The baseline also included 52 rule-based utilities.

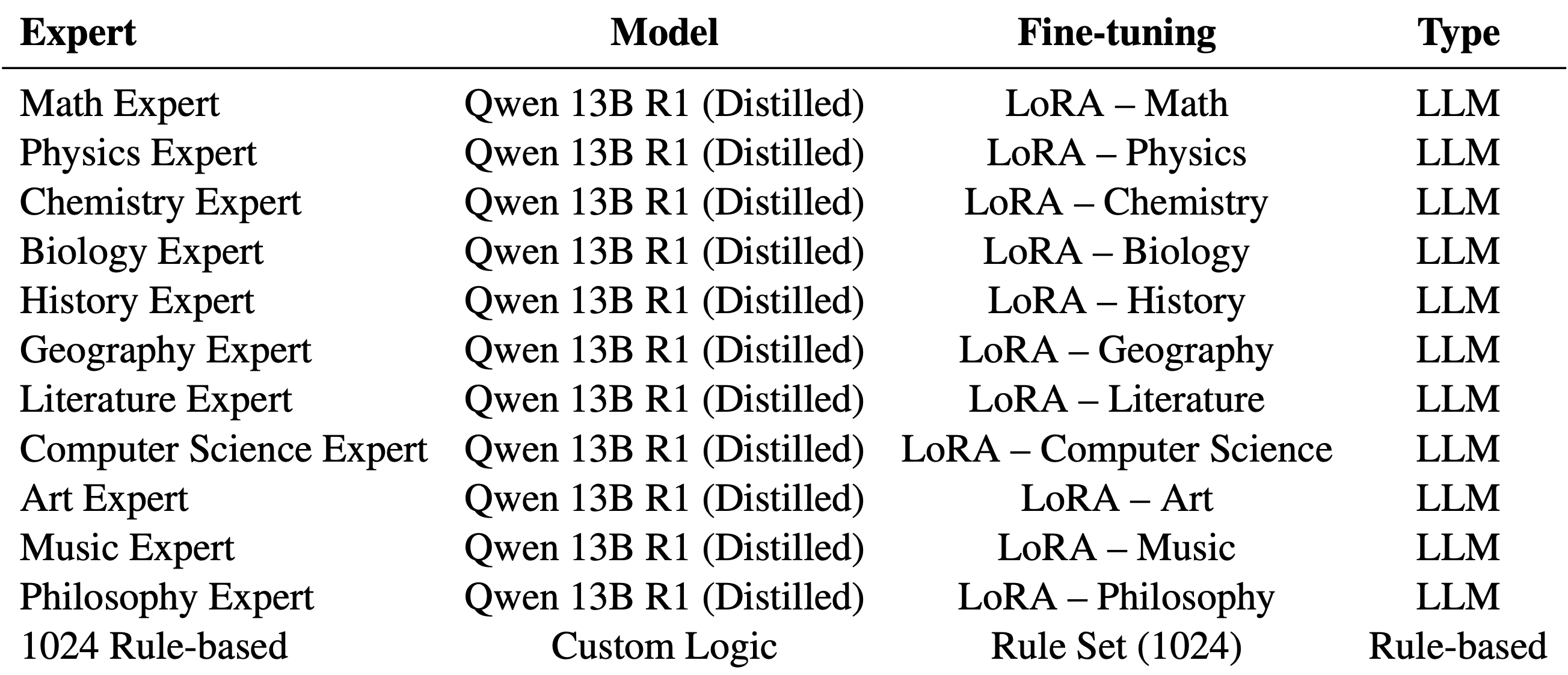

After Self-Evolution

Outcome after autonomous learning: the system expanded to include subject-specialist experts (math, physics, chemistry, biology, history, geography, literature, CS, art, music, philosophy) and 1,024 rule-based functions. Notably, the system stayed focused on the subject matter at hand.
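
For readers who like to see the before-and-after as data, the reported figures can be recorded in a small snapshot structure. This is only an illustrative sketch: the `Snapshot` class and `diff` helper are hypothetical, and it assumes that the baseline held no subject-specialist experts and that the 52 "rule-based utilities" and 1,024 "rule-based functions" count the same kind of component.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Snapshot:
    label: str
    subject_experts: Tuple[str, ...]
    rule_based_functions: int


# Assumption: the zero-state system had no subject-specialist experts.
ZERO_STATE = Snapshot("before", (), 52)

EVOLVED = Snapshot(
    "after",
    ("math", "physics", "chemistry", "biology", "history", "geography",
     "literature", "CS", "art", "music", "philosophy"),
    1024,
)


def diff(before: Snapshot, after: Snapshot) -> Dict[str, object]:
    # Report what the later snapshot has that the earlier one lacked.
    new_experts = sorted(set(after.subject_experts) - set(before.subject_experts))
    return {
        "new_experts": new_experts,
        "added_functions": after.rule_based_functions - before.rule_based_functions,
    }


growth = diff(ZERO_STATE, EVOLVED)
```

Under those assumptions, `growth` lists the 11 subject experts as new and shows 972 more rule-based functions than the baseline.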

Takeaway

Our humanity.ai system demonstrates self-evolving capabilities through expert refinement and autonomous functional expansion, driven by both intrinsic and extrinsic stimuli. This enables dynamic adaptation, self-correction, and enhancement of internal competencies, supporting open-ended, self-supervised learning and offering a scalable approach to developing next-generation AI.

Want to build self-evolving AI with us?

We're opening our doors to researchers, engineers, advisors and visionary design partners. Shape our self-evolving modular AI, access GPUs & robotics, co-author papers and deploy real-world solutions with us.
