What We're Building

humanity.ai is a self-evolving, modular, multi-agent AI-orchestrated system: hallucination-proof, hardware-agnostic, and built for trustworthy, cost-smart intelligence anywhere.

What Makes humanity.ai Unique?

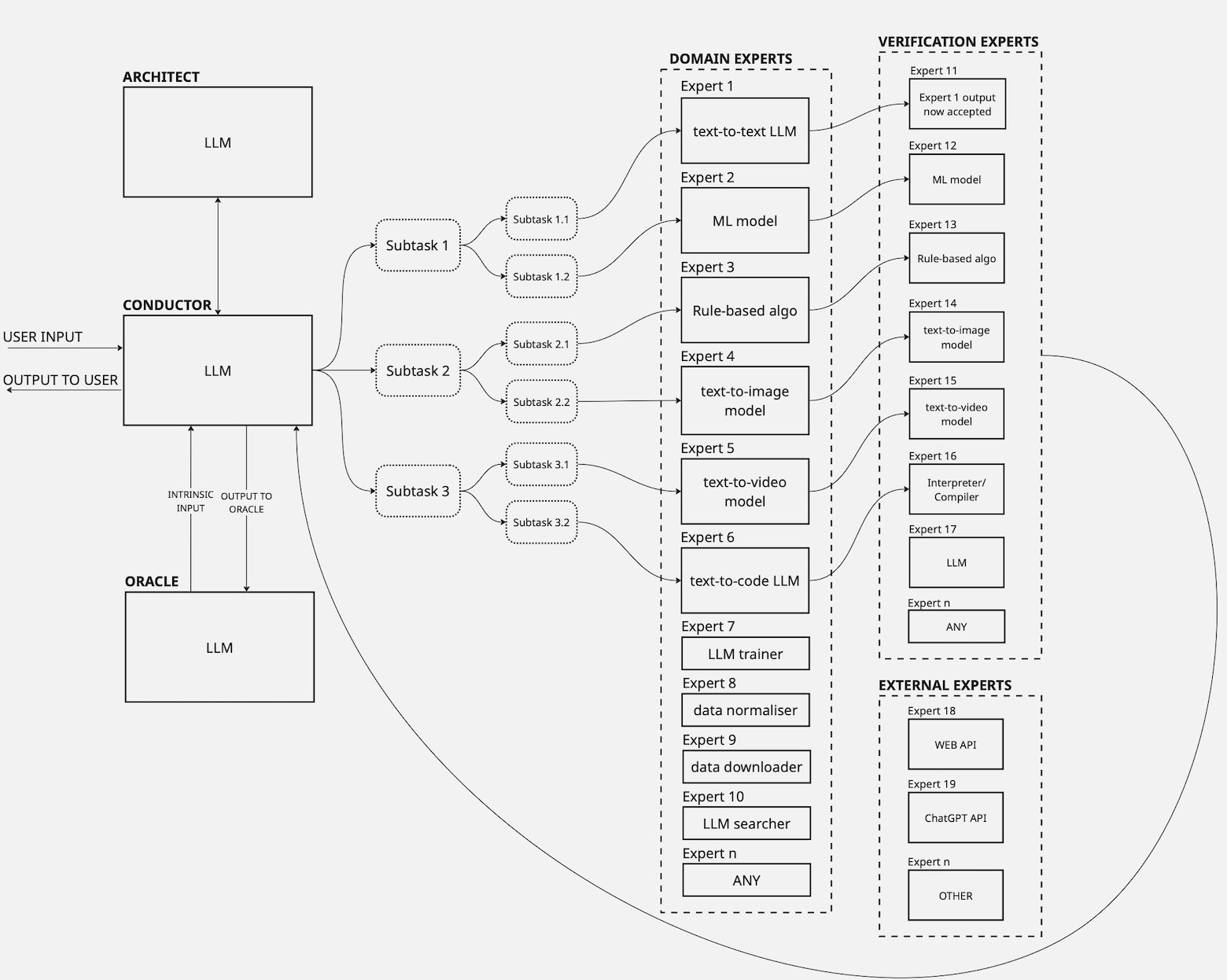

humanity.ai is, at its core, a four-part system composed of a Conductor, Verifiers with domain-specific experts, an Architect that arranges the refinement of existing domain experts or the creation of new ones when the system detects knowledge gaps, and an Oracle that drives intrinsic learning, with no human interference required. The result? A self-governing AI-orchestrated (multi-agent) system.

| Feature | Description | Our System |
|---|---|---|
| Self-evolving | Novel architecture enables knowledge refinement and expansion in response to extrinsic and intrinsic motivation | ✅ |
| Modular | AI system composed of self-contained functional modules | ✅ |
| Plug-n-play | Seamless integration of interoperable modules | ✅ |
| Hallucination proof | Built-in iterative verification to ensure accuracy & validity of output | ✅ |
| Hybrid structure | Modules of any type (AI, ML, rule-based, etc.) can be integrated | ✅ |
| Hardware-agnostic | Deployable across all hardware types & heterogeneous setups | ✅ |
| Hot swap in/out | On-the-fly insertion/removal of modules without system disruption | ✅ |
| Distributed computations | Distributes workloads across multiple processing units | ✅ |
| Dynamic use of RAM | Runs on devices with constrained RAM capacity | ✅ |
| Shared context | Shares global context across modular components | ✅ |
| Leverages open-source | Always current with latest advances in open-source community | ✅ |

Benefits & Use Cases

There are three key benefits to the humanity.ai system:

- Proprietary foundational tech allows for highly efficient compute on any processing platform, which is ideal for real-world constraints such as cost restrictions, edge/on-prem device compatibility, and even limited global cloud-based compute capacity

- Use of smaller domain-specific experts increases versatility, while the recursive verification process reduces hallucination

- Autonomous self-evolution capabilities enable the AI system to adapt to solve new or unforeseen tasks

There is a wide range of potential use cases for this technology, including but not limited to:

- Cloud-based public advanced AI

- Enterprise-grade on-prem AI or cost-effective personal AI systems

- Compute-efficient distributed AI

- Autonomous robotics and unmanned aerial vehicles with seamless control interface integration

Our Technology

At the core of what makes humanity.ai so powerful is Chariot Technologies Lab's iCon (interpretable containers) architecture (based on a proprietary framework), which allows for the design of efficient AI-orchestrated systems composed of interoperable expert modules of any software type and modality (e.g., generative AI, ML, rule-based algorithms).

In an iCon-based AI system, the Conductor LLM decomposes the user's input query into sub-tasks, routes each to the appropriate domain expert, and compiles the responses into a system output. If a sub-task fails verification or no suitable expert exists, the Conductor invokes the Architect, which either fine-tunes the underperforming module or instantiates a new expert to address the capability gap. Additionally, an internal Oracle module generates input prompts designed to expose knowledge gaps, facilitating self-supervised performance enhancement and functional development.
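The routing loop described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the iCon implementation: the class names `Conductor` and `Architect` mirror the roles in the text, but every method, every parameter, the `domain: task; ...` query format, and the retry limit are assumptions made for the example. The experts are plain functions standing in for real models.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical types: an expert maps a sub-task to an answer,
# a verifier accepts or rejects an answer for a given sub-task.
Expert = Callable[[str], str]
Verifier = Callable[[str, str], bool]


@dataclass
class Architect:
    """Stand-in for the Architect role: supplies a new or refined expert."""
    created: List[str] = field(default_factory=list)

    def build_or_refine(self, domain: str) -> Expert:
        # A real system would fine-tune or instantiate a model here (assumption).
        self.created.append(domain)
        return lambda task: f"[{domain}] {task}"


@dataclass
class Conductor:
    experts: Dict[str, Expert]
    verify: Verifier
    architect: Architect
    max_attempts: int = 2  # assumed retry limit, not from the source

    def decompose(self, query: str) -> List[Tuple[str, str]]:
        # Toy decomposition: "domain: task; domain: task".
        pairs = []
        for part in query.split(";"):
            domain, _, task = part.partition(":")
            pairs.append((domain.strip(), task.strip()))
        return pairs

    def run(self, query: str) -> str:
        outputs = []
        for domain, task in self.decompose(query):
            answer = None
            for _ in range(self.max_attempts):
                expert = self.experts.get(domain)
                if expert is not None:
                    candidate = expert(task)
                    if self.verify(task, candidate):
                        answer = candidate
                        break
                # No suitable expert, or verification failed: call the Architect.
                self.experts[domain] = self.architect.build_or_refine(domain)
            outputs.append(answer if answer is not None else f"[unverified] {task}")
        # Compile the sub-task responses into one system output.
        return " | ".join(outputs)


architect = Architect()
conductor = Conductor(
    experts={"math": lambda task: "4"},
    verify=lambda task, answer: bool(answer),  # trivial placeholder verifier
    architect=architect,
)
result = conductor.run("math: 2+2; geo: capital of France")
print(result)  # 4 | [geo] capital of France
```

In this toy run the `math` sub-task is answered by an existing expert, while the missing `geo` expert triggers the Architect, which registers a new one before the sub-task is retried. The Oracle role is left out of the sketch for brevity.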

The iCon architecture is made possible by two of Chariot Technologies Lab's prior innovations:

- A patent-protected mathematical method of converting conditional statements into a fully arithmetic equivalent, which allows any program code with multiple Boolean conditions to be executed faster as a set of arithmetic operations

- A method of software design and execution (patent-pending), wherein the particular functionality is set up as an interpretable container, configured for interpretation into instructions associated with one or more programming languages, and wherein the instructions enable execution of the particular functionality.
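To give a feel for what "conditions as arithmetic" can mean in general, here is a generic, textbook-style illustration of replacing branches with arithmetic on 0/1 flags. It is not the patented Chariot Technologies Lab method, whose details are not described here. The function names are hypothetical, and the example assumes `lo <= hi`.

```python
def clamp_branching(x: int, lo: int, hi: int) -> int:
    """Conventional version: control flow decides which value is returned."""
    if x < lo:
        return lo
    if x > hi:
        return hi
    return x


def select(cond: int, a: int, b: int) -> int:
    """Arithmetic 'if': returns a when cond == 1 and b when cond == 0."""
    return cond * a + (1 - cond) * b


def clamp_arithmetic(x: int, lo: int, hi: int) -> int:
    """Same result as clamp_branching, but with no if-statements.

    Each comparison is turned into a 0/1 flag, and the flags weight the
    candidate results. Assumes lo <= hi, so at most one of `below` and
    `above` is 1.
    """
    below = int(x < lo)
    above = int(x > hi)
    inside = 1 - below - above
    return below * lo + above * hi + inside * x


# The two versions agree on every input in a small test range.
assert all(
    clamp_branching(x, 0, 9) == clamp_arithmetic(x, 0, 9)
    for x in range(-5, 15)
)
```

The comparisons are still evaluated, but their outcomes feed a single arithmetic expression instead of steering control flow, which is the general idea behind branch-free code.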

The humanity.ai platform built on this technology can operate multiple LLMs as well as ML models and rule-based algorithms: a fine-tuned LLM as the Conductor, another open-source model for general knowledge, and 100+ more precise models for math, reasoning, video analysis, and much more, along with verification mechanisms.

Learn more in our blog about modularity as the key to human-level artificial intelligence.