Watch Humanity-A1 Learn New Skills and Build a Social Graph

Watch Humanity-A1 run three AI models concurrently—offline—on a 24GB Mac Mini. This demo showcases modular AI, real-time learning, and verifiable social graph building with just 16B params.

Live demo of learning new skills on the fly and building a local social graph by learning human faces—all offline.

The Chariot Technologies Lab team is thrilled to share a new demo of Humanity-A1, the latest version of our modular AI system, running fully offline on a 24GB Mac Mini.

No internet, no cloud, and no tricks. Just real intelligence in a compact, verifiable, self-contained package.

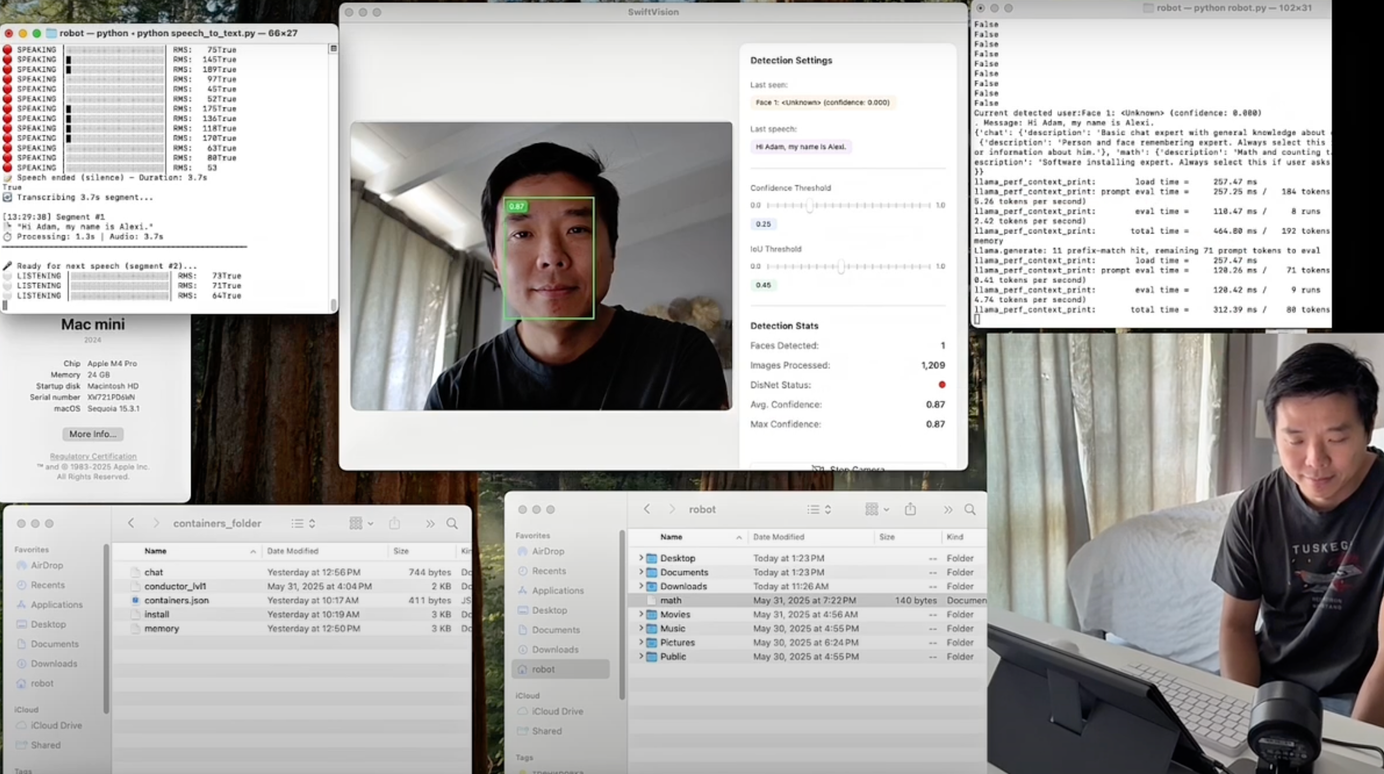

In this video, co-founder Timur's AI friend Adam (running on Humanity-A1) meets our CEO Alexey for the first time and memorizes his face so he can remember him as a friend of Timur's in the future.

When Alexey asks Adam to answer a math problem, Adam informs Alexey that he doesn't know math. So Alexey uploads the skill and voila! Adam knows math. It's like when Neo learns jiu jitsu 😄

Then, Timur enters the picture, and Adam recognizes that his primary user has arrived and greets him.

Two very cool things you'll see

- The system can learn new skills on the fly and incorporate them into its broader intelligence architecture. In this case, it learns how to do math as soon as Alexey (our CEO) uploads the new ability.

- The system recognizes and differentiates between two different people in real time, addressing them separately by name. The humanity.ai system can build a local social graph of its primary user and their companions over time, understanding each relationship and communicating uniquely with each acquaintance. And if a stranger enters the picture, the system recognizes that as well.

Watch the Demo

What's in the Demo?

This may look like a clunky prototype (we know the voice model needs some love, and it's not running at breakneck speed...yet), but under the hood, it's showcasing several breakthroughs that redefine what's possible with AI at the edge.

1. Local AI on Consumer Hardware

We’re running three models concurrently—a vision model and two LLMs—on a single Mac Mini with just 24GB of RAM. The combined setup totals 16 billion parameters, which would normally require at least 32GB.
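For a rough sense of where the 32GB figure comes from, here is a back-of-the-envelope sketch. It assumes 16-bit (2-byte) weights and counts the weights alone, ignoring activations, caches, and runtime overhead. The precisions listed are illustrative assumptions, not details of how Humanity-A1 stores its models.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


TOTAL_PARAMS = 16e9  # combined parameter count stated in the post

# Hypothetical precisions, for comparison only.
PRECISIONS = [("fp32", 4), ("fp16/bf16", 2), ("int8", 1), ("4-bit", 0.5)]

for label, bytes_per_param in PRECISIONS:
    gigabytes = weight_memory_gb(TOTAL_PARAMS, bytes_per_param)
    print(f"{label:>10}: {gigabytes:.0f} GB")
```

At 2 bytes per parameter, 16 billion parameters come to about 32GB of weights, which is more than the 24GB on the machine in the demo.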

2. Concurrent, Cross-Stack Execution

The models rely on different ML stacks (Whisper, face recognition, text-to-speech, text-to-text) that are normally incompatible. Our system runs them simultaneously, with no virtualization or wrappers.

3. Plug-and-Play AI Architecture

Thanks to our iCon architecture, any AI or ML model can be “containerized” and added on the fly as an expert module—no retraining required. This modularity is key to real-world scalability.

4. Orchestration + Verification

A built-in Conductor dynamically routes tasks to relevant experts. If no expert is available, the system says so. No hallucinations, no guesswork.
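To make the routing idea concrete, here is a minimal sketch of a dispatcher that sends a task to a registered expert and says so plainly when none exists. It is not the actual Conductor or iCon code: the `Conductor` class, `register`, `route`, and `math_expert` are hypothetical names invented for illustration, and the toy math expert only handles input of the form "a op b".

```python
import operator
from typing import Callable, Dict

Expert = Callable[[str], str]


class Conductor:
    """Hypothetical dispatcher: maps a skill name to an expert callable."""

    def __init__(self) -> None:
        self._experts: Dict[str, Expert] = {}

    def register(self, skill: str, expert: Expert) -> None:
        # Experts can be added at runtime, with no change to existing ones.
        self._experts[skill] = expert

    def route(self, skill: str, request: str) -> str:
        expert = self._experts.get(skill)
        if expert is None:
            # No matching expert: report it instead of guessing an answer.
            return f"I don't have a '{skill}' skill yet."
        return expert(request)


def math_expert(request: str) -> str:
    """Toy expert: evaluates 'a op b' for +, -, and *."""
    ops = {"+": operator.add, "-": operator.sub, "*": operator.mul}
    left, symbol, right = request.split()
    return str(ops[symbol](int(left), int(right)))


conductor = Conductor()
before = conductor.route("math", "2 + 3")   # no expert registered yet
conductor.register("math", math_expert)     # "upload" the skill
after = conductor.route("math", "2 + 3")
print(before)
print(after)
```

The first call returns a refusal and the second returns an answer, which mirrors the moment in the video where Adam cannot do math until the skill is uploaded.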

5. Real-Time Learning

The vision model adds new faces to memory on the fly, showing how dynamic training works in real time. This lays the groundwork for lifelong learning and, in this case, lets the system build a local social graph of the primary user and their acquaintances.
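One common way to add faces to memory without retraining is to store an embedding per person and compare new faces against the stored ones. The sketch below illustrates that general technique under stated assumptions; it is not a description of how Humanity-A1's vision model works. `FaceMemory`, `enroll`, `identify`, the 0.8 threshold, and the three-number toy embeddings are all hypothetical, and a real system would get its embeddings from a face model.

```python
import math
from typing import Dict, List, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class FaceMemory:
    """Hypothetical store of one reference embedding per known person."""

    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold  # assumed cutoff for "same person"
        self._known: Dict[str, List[float]] = {}

    def enroll(self, name: str, embedding: Sequence[float]) -> None:
        # Adding a person is a dictionary write; no model weights change.
        self._known[name] = list(embedding)

    def identify(self, embedding: Sequence[float]) -> Optional[str]:
        # Return the best match at or above the threshold, else None (stranger).
        best_name, best_score = None, self.threshold
        for name, known in self._known.items():
            score = cosine_similarity(embedding, known)
            if score >= best_score:
                best_name, best_score = name, score
        return best_name


memory = FaceMemory()
memory.enroll("Timur", [1.0, 0.0, 0.0])
stranger = memory.identify([0.0, 1.0, 0.0])   # not enrolled yet
memory.enroll("Alexey", [0.0, 1.0, 0.0])      # learned on the fly
friend = memory.identify([0.1, 0.95, 0.0])    # a slightly different view
print(stranger, friend)
```

Because enrolling is only a lookup-table update, a new face can be recognized immediately, and anyone below the threshold is treated as a stranger.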

6. Apple Silicon + PyTorch + CUDA

We’re running PyTorch models that were built for CUDA natively on Apple Silicon, another technical milestone that opens the door to more diverse hardware support.

Why This Matters

This isn’t just a proof-of-concept. It’s a glimpse into what modular, private, adaptable AI can look like when it’s untethered from the cloud.

We’re not building an LLM. We’re building a brain—one that evolves, scales, respects user privacy by design, and unlocks a new path to human-level artificial intelligence.

Demo Recap:

- Fully offline system

- Runs on a 24GB Mac Mini

- 3 models, 16B params total

- Real-time learning

- Hardware-agnostic modularity

- Verifiable orchestration

- Multi-face recognition and social graph building

Want to be part of our work?

We're actively seeking AI researchers and engineers to join our team. Get in touch at [email protected] if you're interested, and be sure to sign up for our email newsletter below to stay up to date with the latest developments.

humanity.ai Newsletter

Join the newsletter to receive the latest updates in your inbox.