Why Modularity is the Missing Key to Human-Level Artificial Intelligence

humanity.ai’s iCon architecture is reimagining AI as a modular, open system, where intelligence evolves one expert at a time. It’s efficient, verifiable, and runs on everyday devices.

Inside the humanity.ai System and the iCon Architecture That Could Change Everything

As the race toward human-level artificial intelligence accelerates, it’s becoming increasingly clear that today’s frontier language models—monolithic giants like GPT-4, Claude, and Gemini—are powerful, but limited. They consume massive resources, hallucinate regularly, and remain black boxes, making them hard to trust.

Enter humanity.ai, a breakthrough from Chariot Technologies Lab built on a completely different foundation: modularity.

Modular AI is an architecture that combines many small, specialised models (‘experts’) under a conductor that verifies each answer before you ever see it.

Rethinking the Framework: The iCon Architecture

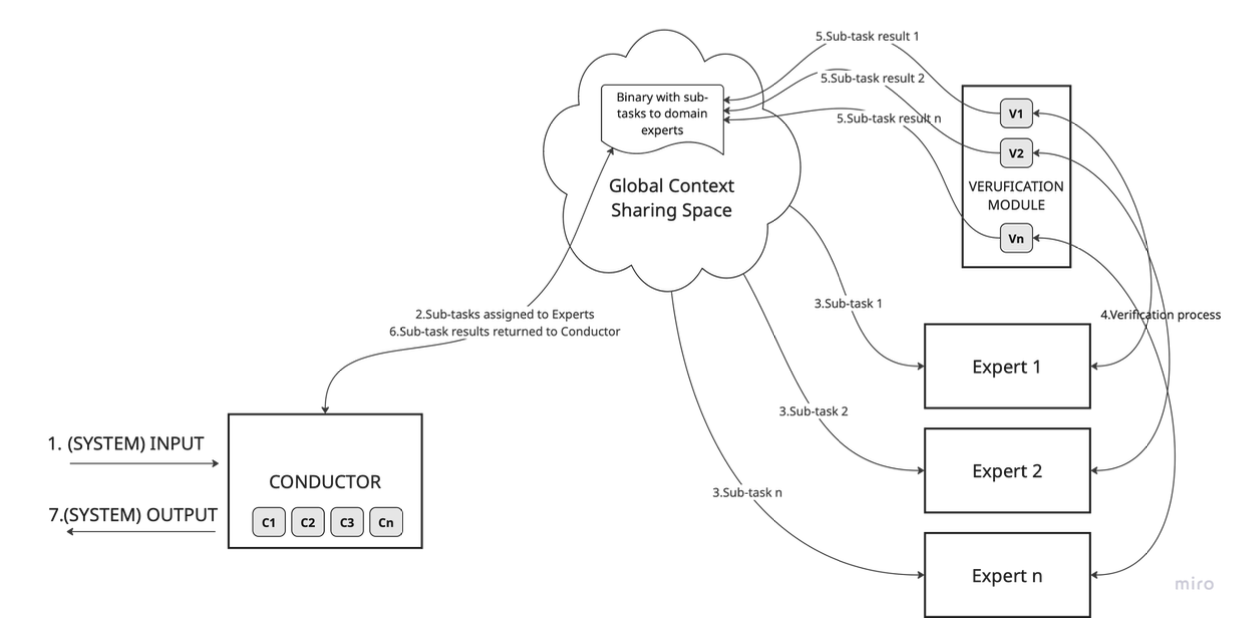

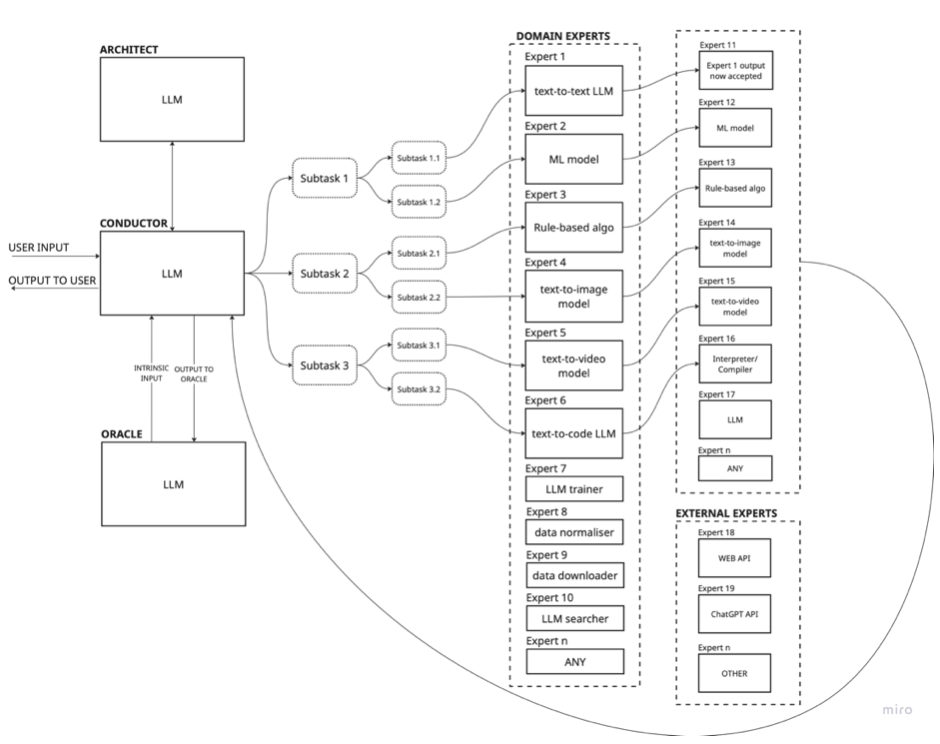

At the heart of the humanity.ai system is a unique architecture called iCon, short for interpretable containers. Rather than training a single large model to do everything, iCon is a composable system of independently developed “experts,” each responsible for specific domains or tasks. These experts are wrapped in standardized containers and orchestrated by a Conductor model, which assigns tasks and verifies outcomes through a nested Verification Module.

Unlike monolithic systems, which often activate far more of the network than a given prompt requires, iCon activates only the experts needed for the task. The result? Better performance, faster inference, and much lower compute cost.
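To make the routing idea concrete, here is a minimal Python sketch of a conductor that runs only the expert registered for a task's domain and checks the answer before returning it. Every name in it (`Conductor`, `Expert`, `register`, `ask`, and the toy `arithmetic` and `echo` experts) is a hypothetical illustration rather than humanity.ai's actual API, and the per-expert `verify` function is only a stand-in for the Verification Module.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Expert:
    """Hypothetical container: one domain, one solver, one verifier."""
    domain: str
    solve: Callable[[str], str]
    verify: Callable[[str, str], bool]


@dataclass
class Conductor:
    """Hypothetical orchestrator that routes each task to a single expert."""
    experts: Dict[str, Expert] = field(default_factory=dict)
    activated: List[str] = field(default_factory=list)  # log of experts actually run

    def register(self, expert: Expert) -> None:
        self.experts[expert.domain] = expert

    def ask(self, domain: str, prompt: str) -> str:
        expert = self.experts.get(domain)
        if expert is None:
            raise LookupError(f"no expert registered for domain {domain!r}")
        self.activated.append(domain)  # only this expert is executed
        answer = expert.solve(prompt)
        if not expert.verify(prompt, answer):
            raise ValueError(f"answer from {domain!r} failed verification")
        return answer


def add_numbers(prompt: str) -> str:
    # Toy solver: sums whitespace-separated integers.
    return str(sum(int(tok) for tok in prompt.split()))


def check_sum(prompt: str, answer: str) -> bool:
    # Toy verifier: recomputes the sum with a separate loop.
    total = 0
    for tok in prompt.split():
        total += int(tok)
    return answer == str(total)


conductor = Conductor()
conductor.register(Expert("arithmetic", add_numbers, check_sum))
conductor.register(Expert("echo", lambda p: p, lambda p, a: a == p))

result = conductor.ask("arithmetic", "2 3 5")
print(result)               # 10
print(conductor.activated)  # ['arithmetic']
```

The `echo` expert is registered but never executed for the arithmetic request, which is the point of selective activation: the cost of a request depends on the experts it touches, not on how many are installed.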

Real Benchmarks, Real Breakthroughs

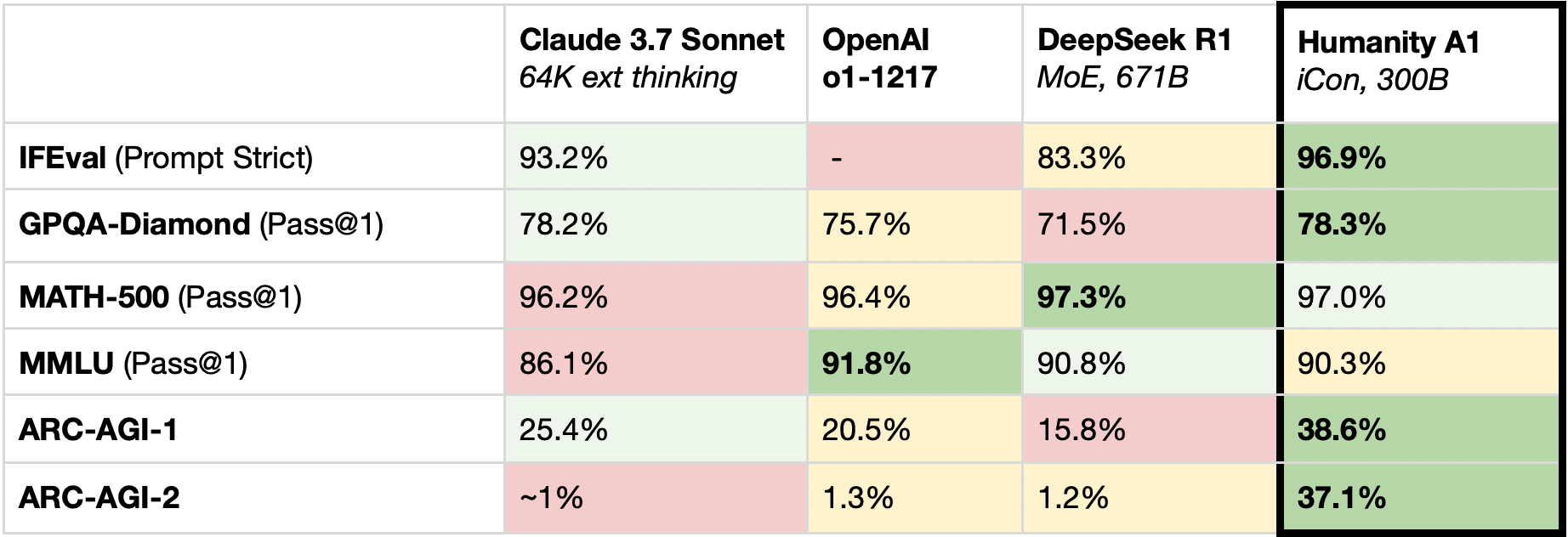

Despite using just 300 billion parameters, humanity.ai outperforms far larger systems on many standard benchmarks:

- ARC-AGI-2: 37.1% (vs. ~1% for Claude and OpenAI models)

- GPQA-Diamond: 78.3%

- IF-Eval (Prompt Strict): 96.9%

These results were achieved on consumer hardware, specifically a MacBook Pro, demonstrating that high-level AI reasoning doesn't require a data center and that consumer-grade human-level artificial intelligence is achievable.

What Makes iCon Different?

- Verification-first AI: Like humans, iCon doesn’t just guess; it checks its work. Outputs are verified by specialized validators before being returned.

- Memory and learning: When errors are caught, the system stores them in memory and uses them to fine-tune the relevant expert module. Over time, it gets smarter autonomously.

- Self-evolving architecture: If no expert exists for a task, iCon can train a new one on the fly, integrate it into the system, and improve itself without human intervention. This unlocks the possibility of self-evolving agents.

- Hardware agnostic: Thanks to our patented method for converting logic into arithmetic operations, iCon systems can run on virtually any device, even iPhones.
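The verification, memory, and self-extension behaviours listed above can be sketched as one small loop. This is a simplified illustration under stated assumptions: `ModularSystem`, `error_memory`, `ensure_expert`, `refine`, and `train_expert` are hypothetical names, "training" is reduced to memorising labelled examples, and the corrections passed to `refine` are supplied by hand. How the real system obtains corrections and fine-tunes an expert is not described in this article.

```python
from typing import Callable, Dict, List, Optional, Tuple

Solver = Callable[[str], str]
Verifier = Callable[[str, str], bool]


def train_expert(examples: List[Tuple[str, str]]) -> Solver:
    """Stand-in for training: memorise labelled (prompt, answer) pairs."""
    table = dict(examples)
    return lambda prompt: table.get(prompt, "")


class ModularSystem:
    """Hypothetical system that logs rejected answers and patches experts."""

    def __init__(self, verify: Verifier) -> None:
        self.verify = verify
        self.experts: Dict[str, Solver] = {}
        # Each entry is (domain, prompt, rejected answer).
        self.error_memory: List[Tuple[str, str, str]] = []

    def ensure_expert(self, domain: str, examples: List[Tuple[str, str]]) -> None:
        # Create an expert only when the domain has none yet.
        if domain not in self.experts:
            self.experts[domain] = train_expert(examples)

    def answer(self, domain: str, prompt: str) -> Optional[str]:
        # Return the answer only if it passes verification; otherwise log it.
        candidate = self.experts[domain](prompt)
        if self.verify(prompt, candidate):
            return candidate
        self.error_memory.append((domain, prompt, candidate))
        return None

    def refine(self, domain: str, corrections: Dict[str, str]) -> None:
        # Patch the expert with corrections for prompts it previously failed.
        failed = {p for d, p, _ in self.error_memory if d == domain}
        patch = {p: a for p, a in corrections.items() if p in failed}
        previous = self.experts[domain]
        self.experts[domain] = lambda prompt: patch.get(prompt, previous(prompt))
        self.error_memory = [
            e for e in self.error_memory if not (e[0] == domain and e[1] in patch)
        ]


system = ModularSystem(verify=lambda prompt, answer: answer == prompt.upper())
system.ensure_expert("upper", [("a", "A")])  # no expert yet, so one is created

first = system.answer("upper", "a")   # passes verification
second = system.answer("upper", "b")  # unknown prompt: rejected and logged
system.refine("upper", {"b": "B"})
third = system.answer("upper", "b")   # passes after refinement

print(first, second, third)  # A None B
```

A rejected answer is never returned to the caller; it is stored alongside its prompt so that a later refinement step can target exactly the cases the expert got wrong.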

Why This Matters for the Future of AI

Monolithic LLMs are already hitting their limits. More parameters no longer mean more intelligence. Meanwhile, the world is shifting toward edge AI, personal AGI, and autonomous robotics—domains that demand modularity, efficiency, and transparency.

iCon is not just an architecture; it's a philosophy. It allows for public, open, decentralized evolution of human-level artificial intelligence: one containerized expert at a time.

And with the humanity.ai system, we’re seeing what that future could look like: smarter, cheaper, safer, and more scalable intelligence.

Interested in Working With Us?

We're actively seeking AI researchers and engineers to work with us. PhD students, entrepreneurs, and enthusiasts can use our platform for research, contribute to our open-source project, test our system, and leverage our compute to run experiments.

Contact us about collaborating: [email protected]

FAQ

What is modular AI?

Modular AI replaces one giant model with many small, specialised experts collaborating through an orchestrator—delivering stronger reasoning while using far less compute.

How does iCon verify its outputs?

Every expert’s answer is passed to dedicated verifier agents that cross-check facts, logic, and consistency before the result reaches the user, virtually eliminating hallucinations.

Can iCon run on mobile or edge devices?

Yes. Because each containerised expert loads only when needed and remains lightweight, iCon can operate on consumer laptops and modern smartphones—not just data-centre GPUs.
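As a rough illustration of loading experts only when needed, the Python sketch below keeps a registry of loader functions and builds an expert on first use. The class name `LazyExpertRegistry`, its methods, and the `max_loaded` cap with least-recently-used eviction are assumptions made for this example; the article does not say how iCon manages memory on small devices.

```python
from typing import Callable, Dict, List

Expert = Callable[[str], str]


class LazyExpertRegistry:
    """Hypothetical registry: stores loaders and builds an expert on first use."""

    def __init__(self, max_loaded: int = 2) -> None:
        self._loaders: Dict[str, Callable[[], Expert]] = {}
        self._loaded: Dict[str, Expert] = {}  # ordered from least to most recently used
        self.max_loaded = max_loaded          # assumed memory budget, in experts
        self.load_log: List[str] = []         # records every actual load

    def register(self, name: str, loader: Callable[[], Expert]) -> None:
        # Registering stores only the loader; nothing is built yet.
        self._loaders[name] = loader

    def get(self, name: str) -> Expert:
        if name in self._loaded:
            # Re-insert to mark this expert as most recently used.
            self._loaded[name] = self._loaded.pop(name)
            return self._loaded[name]
        if len(self._loaded) >= self.max_loaded:
            # Evict the least recently used expert to stay within budget.
            oldest = next(iter(self._loaded))
            del self._loaded[oldest]
        expert = self._loaders[name]()
        self.load_log.append(name)
        self._loaded[name] = expert
        return expert

    def loaded(self) -> List[str]:
        return list(self._loaded)


registry = LazyExpertRegistry(max_loaded=2)
registry.register("upper", lambda: str.upper)
registry.register("lower", lambda: str.lower)
registry.register("title", lambda: str.title)

before = registry.loaded()            # nothing is loaded at registration time
out = registry.get("upper")("edge")   # first use triggers the load
registry.get("lower")
registry.get("upper")                 # already loaded, no second load
registry.get("title")                 # evicts "lower", the least recently used

print(before, out)        # [] EDGE
print(registry.loaded())  # ['upper', 'title']
print(registry.load_log)  # ['upper', 'lower', 'title']
```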
